Ansible AWX in Kubernetes

AWX

About a year ago, Red Hat open sourced Ansible Tower as AWX, a web UI for deploying with Ansible.

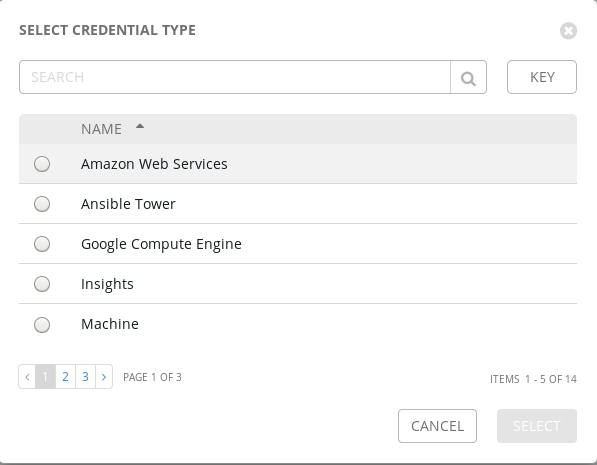

AWX lets you manage all your Ansible projects, with inventories, encrypted credentials, playbooks, etc., in a great web UI. For example, you can create multiple credentials in AWX, stored encrypted in the AWX database, to hold your:

- Ansible Vault password

- Google / Amazon / Azure cloud secret keys

- OpenStack tenant API key

- SSH deployment private key

- Git SSH private key

- etc.

If these credential types are not sufficient, you can create your own custom credential type, which can export environment variables or store secrets in a file when called.
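As a rough sketch of what such a custom credential type looks like, here is a payload of the kind accepted by the AWX credential types API (`/api/v2/credential_types/`). The type name, the `api_token` field and the `EXAMPLE_API_TOKEN` variable are made up for illustration and are not taken from our setup.

```python
import json

# Hypothetical custom credential type: name, field id and variable names
# are illustrative assumptions, not values from the article.
credential_type = {
    "name": "Example API token",
    "kind": "cloud",
    # "inputs" describes the form fields shown when creating a credential.
    "inputs": {
        "fields": [
            {"id": "api_token", "type": "string", "label": "API token", "secret": True},
        ],
        "required": ["api_token"],
    },
    # "injectors" describes how the secret is handed to the playbook run.
    "injectors": {
        # Exported as an environment variable for the job.
        "env": {"EXAMPLE_API_TOKEN": "{{ api_token }}"},
        # Or rendered into a temporary file for the job.
        "file": {"template": "token={{ api_token }}\n"},
    },
}

payload = json.dumps(credential_type, indent=2)
print(payload)
```

The same two sections, inputs and injectors, are what the web UI asks for when you create a credential type by hand.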

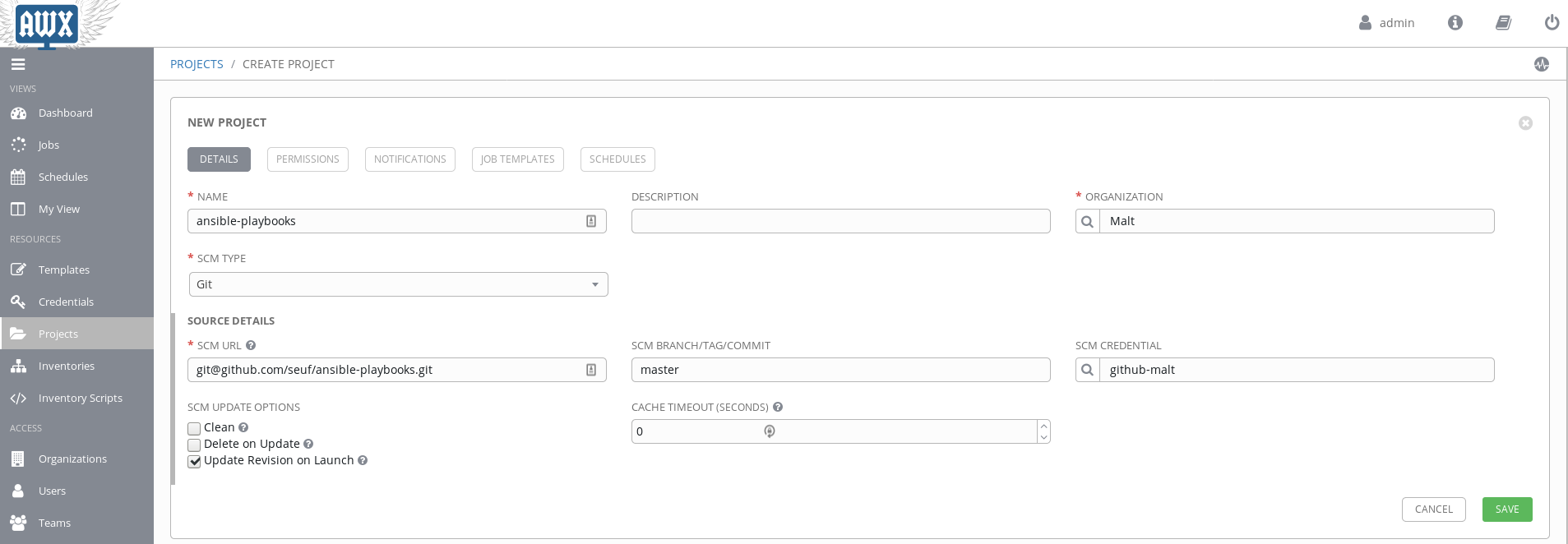

Once you have imported your credentials into AWX, you can create a project sourced from the git repository that contains your playbooks, requirements and roles.

Then create inventories with dynamic sources from your cloud provider or from your versioned source code. You can even create custom inventory scripts.

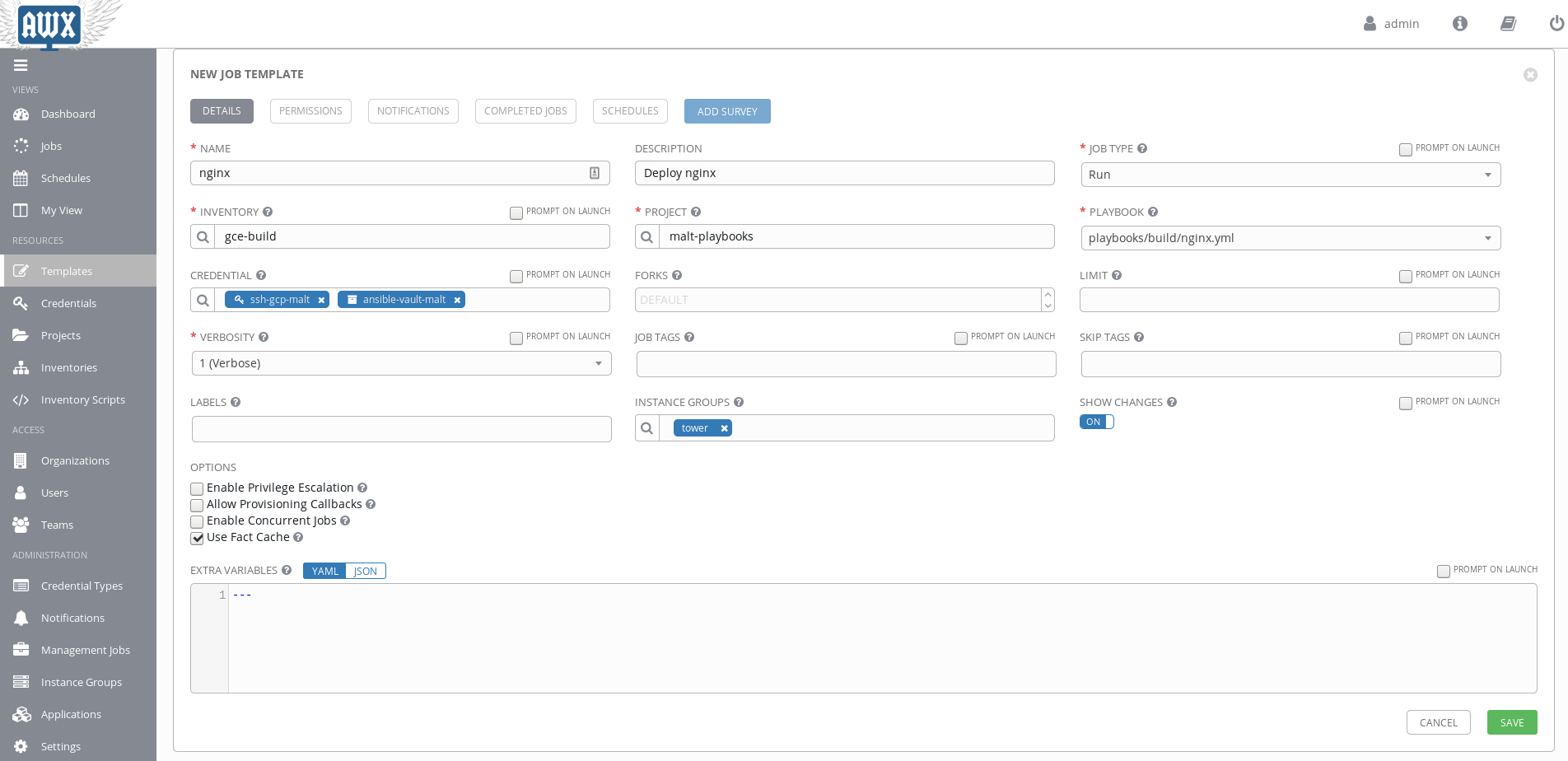

Before running Ansible playbooks in AWX, you have to create templates linked to your playbooks, where users can override variables or inventories before running the playbook.

Kubernetes

Here at Malt, we plan to migrate our infrastructure to Google Cloud, so why not use the one-click Kubernetes cluster available from gcloud? All our current deployments are made with a mix of Terraform for machine provisioning, Bamboo for application builds and, of course, Ansible for deployments.

We still use Terraform to create the k8s cluster, because there is a google_container_cluster resource available. You just have to write a Terraform template with a Kubernetes cluster definition and attach a node pool to it. Finally, type "terraform apply" and, after a coffee, your cluster will be ready.

Now it’s time to deploy AWX into our fresh Kubernetes cluster. I looked for an existing helm chart, but the only one I found is in a pull request on the official helm chart repository. I tried it, but unfortunately it didn’t work for me.

AWX also provides a Kubernetes deployment playbook, but it uses one big pod with everything inside (rabbitmq, memcached, web and task). The default resources requested by each pod were too high; none of the small "testing" nodes in our cluster could satisfy the requirements. We also prefer to use one pod per service.

So I created an Ansible playbook and role to deploy and configure AWX in Kubernetes. This playbook is a mix of a helm chart deployment for the PostgreSQL database and custom kubectl configuration templates. We use Traefik as the ingress controller, with automatic Let’s Encrypt certificate generation.

Ansible AWX Playbook

Traefik helm chart

First we need to automatically generate Let’s Encrypt TLS certificates. I’m a big fan of Traefik, which can be configured with Kubernetes as a source for new endpoints. Bonus: Traefik can be installed with a helm chart! All I had to do was override some default values of the helm chart to configure Traefik properly.

rbac:
  enabled: true
loadBalancerIP: "{{ kubernetes_loadbalancer_ip }}"
kubernetes:
  ingressClass: traefik
dashboard:
  enabled: true
  domain: "traefik.{{ dns_zone }}"
  ingress:
    annotations:
      kubernetes.io/ingress.class: "traefik"
ssl:
  enabled: true
  enforced: true
acme:
  enabled: true
  email: "{{ acme_email }}"
  staging: false
  domains:
    enabled: true
    domainsList:
      - main: "*.{{ dns_zone }}"
      - sans:
          - "{{ dns_zone }}"
  challengeType: dns-01
  delayBeforeCheck: 10
  dnsProvider:
    name: dyn
    dyn:
      DYN_CUSTOMER_NAME: "{{ dyndns_customername }}"
      DYN_USER_NAME: "{{ dyndns_username }}"
      DYN_PASSWORD: "{{ dyndns_password }}"
metrics:
  prometheus:
    enabled: true

As you can see, Traefik asks Let’s Encrypt (through ACME) to generate a wildcard certificate, thanks to the dns-01 challenge type. The load balancer IP is set to a reserved IP that *.{{ dns_zone }} already points to in our DNS provider.
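The values above ask for two names: the wildcard as the main domain and the bare zone as a SAN. A wildcard only matches a single DNS label, which is why the zone itself has to be listed separately. The small check below illustrates that rule; `example.com` is a placeholder zone and the function is only a sketch of the matching rule, not the validation a TLS library performs.

```python
def covered_by_certificate(hostname, cert_names):
    """Return True if hostname matches one of the certificate names.

    A leading "*" label matches exactly one DNS label, so "*.example.com"
    covers "awx.example.com" but neither "example.com" nor "a.b.example.com".
    """
    host_labels = hostname.lower().split(".")
    for name in cert_names:
        name_labels = name.lower().split(".")
        if len(name_labels) != len(host_labels):
            continue
        if name_labels[0] == "*":
            # Wildcard: only the remaining labels have to be identical.
            if name_labels[1:] == host_labels[1:]:
                return True
        elif name_labels == host_labels:
            return True
    return False


dns_zone = "example.com"  # placeholder for {{ dns_zone }}
cert_names = ["*." + dns_zone, dns_zone]  # main domain plus SAN, as in the values

for host in ("awx.example.com", "traefik.example.com", "example.com", "a.b.example.com"):
    print(host, covered_by_certificate(host, cert_names))
```

With this pair of names, every first-level subdomain such as awx or traefik is covered by a single certificate.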

I’ve created a tiny Ansible helm role that just renders a Jinja template for the helm values and installs or upgrades the chart:

# List the releases that already exist (first column of "helm ls")
- name: check if helm already installed
  shell: helm ls | awk '{ print $1}'
  register: helm_charts_installed

# Install charts that have no release yet
- name: Install helm charts
  shell: echo {{ lookup('template', '../../templates/helm/' + item.name + '-values.yml.j2') | quote }} | helm install --namespace {{ item.namespace }} --name {{ item.name }} {{ item.chart }} -f -
  loop: "{{ helm_charts }}"
  when: item.name not in helm_charts_installed.stdout_lines

# Upgrade charts that are already installed
- name: Upgrade helm chart
  shell: echo {{ lookup('template', '../../templates/helm/' + item.name + '-values.yml.j2') | quote }} | helm upgrade --namespace {{ item.namespace }} {{ item.name }} {{ item.chart }} -f -
  loop: "{{ helm_charts }}"
  when: item.name in helm_charts_installed.stdout_lines

I can call this role with a helm_charts variable like this:

- role: helm
  helm_charts:
    - name: traefik
      namespace: kube-system
      chart: stable/traefik

PostgreSQL helm chart

AWX needs a PostgreSQL database to work. Helm provides an up-to-date postgresql chart, which can be installed like Traefik with my tiny Ansible helm role. Here is my postgresql helm chart values template:

postgresqlUsername: awx
postgresqlPassword: "{{ awx_pg_password }}"
postgresqlDatabase: awx
persistence:
  size: "{{ awx_pg_volume_capacity|default('8') }}Gi"
metrics:
  enabled: true

AWX Kubernetes Deployment

Now that we have a Kubernetes ingress controller with a wildcard DNS entry, a wildcard TLS certificate and a PostgreSQL database installed, we can deploy the AWX docker images into Kubernetes.

So here are the different Kubernetes Deployment templates:

Rabbitmq :

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: rabbitmq-deployement
  namespace: awx
  labels:
    app: rabbitmq
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rabbitmq
  template:
    metadata:
      labels:
        app: rabbitmq
    spec:
      containers:
        - image: {{ awx_rabbitmq_image }}
          name: rabbitmq
          env:
            - name: RABBITMQ_DEFAULT_VHOST
              value: awx
            - name: RABBITMQ_ERLANG_COOKIE
              value: cookiemonster
          livenessProbe:
            exec:
              command: ["rabbitmqctl", "status"]
            initialDelaySeconds: 30
            timeoutSeconds: 10
          readinessProbe:
            exec:
              command: ["rabbitmqctl", "status"]
            initialDelaySeconds: 10
            timeoutSeconds: 10
---
apiVersion: v1
kind: Service
metadata:
  name: rabbitmq-svc
  namespace: awx
spec:
  selector:
    app: rabbitmq
  ports:
    - protocol: TCP
      port: 5672
      targetPort: 5672

Memcached :

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: memcached-deployement
  namespace: awx
  labels:
    app: memcached
spec:
  replicas: 1
  selector:
    matchLabels:
      app: memcached
  template:
    metadata:
      labels:
        app: memcached
    spec:
      containers:
        - image: memcached:alpine
          name: memcached
---
kind: Service
apiVersion: v1
metadata:
  name: memcached-svc
  namespace: awx
spec:
  selector:
    app: memcached
  ports:
    - protocol: TCP
      port: 11211
      targetPort: 11211

Secrets :

---
apiVersion: v1
kind: Secret
metadata:
  namespace: awx
  name: "awx-secrets"
type: Opaque
data:
  admin_password: "{{ awx_admin_password | b64encode }}"
  pg_password: "{{ awx_pg_password | b64encode }}"
  rabbitmq_password: "{{ awx_rabbitmq_password | b64encode }}"
  rabbitmq_erlang_cookie: "{{ awx_rabbitmq_erlang_cookie | b64encode }}"
  confd_contents: "{{ lookup('template', 'credentials.py.j2') | b64encode }}"
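Every value under data in a Kubernetes Secret has to be base64 encoded, which is what the b64encode filter does in the template above. Here is a minimal Python equivalent, using the string "guest" purely as a sample value; the helper names are my own.

```python
import base64


def b64encode_text(value):
    """Encode a string the way a Secret's data field expects it."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def b64decode_text(value):
    """Reverse operation, handy to check what is stored in a Secret."""
    return base64.b64decode(value).decode("utf-8")


encoded = b64encode_text("guest")
print(encoded)
print(b64decode_text(encoded))
```

Keep in mind that base64 is an encoding, not encryption: anyone who can read the Secret can decode it.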

credentials.py.j2

DATABASES = {
    'default': {
        'ATOMIC_REQUESTS': True,
        'ENGINE': 'django.db.backends.postgresql',
        'NAME': "{{ awx_pg_database }}",
        'USER': "{{ awx_pg_username }}",
        'PASSWORD': "{{ awx_pg_password }}",
        'HOST': "{{ awx_pg_hostname|default('postgresql-awx-postgresql') }}",
        'PORT': "{{ awx_pg_port }}",
    }
}

BROKER_URL = 'amqp://{}:{}@{}:{}/{}'.format(
    "guest",
    "guest",
    "rabbitmq-svc.awx",
    "5672",
    "awx")

CHANNEL_LAYERS = {
    'default': {'BACKEND': 'asgi_amqp.AMQPChannelLayer',
                'ROUTING': 'awx.main.routing.channel_routing',
                'CONFIG': {'url': BROKER_URL}}
}

ConfigMap :

apiVersion: v1
kind: ConfigMap
metadata:
  name: awx-config
  namespace: awx
data:
  secret_key: {{ awx_secret_key }}
  awx_settings: |
    import os
    import socket
    ADMINS = ()

    AWX_PROOT_ENABLED = True

    # Automatically deprovision pods that go offline
    AWX_AUTO_DEPROVISION_INSTANCES = True

    SYSTEM_TASK_ABS_CPU = {{ ((awx_task_cpu_request|int / 1000) * 4)|int }}
    SYSTEM_TASK_ABS_MEM = {{ ((awx_task_mem_request|int * 1024) / 100)|int }}

    # Autoprovisioning should replace this
    CLUSTER_HOST_ID = socket.gethostname()
    SYSTEM_UUID = '00000000-0000-0000-0000-000000000000'

    SESSION_COOKIE_SECURE = False
    CSRF_COOKIE_SECURE = False

    REMOTE_HOST_HEADERS = ['HTTP_X_FORWARDED_FOR']

    STATIC_ROOT = '/var/lib/awx/public/static'
    PROJECTS_ROOT = '/var/lib/awx/projects'
    JOBOUTPUT_ROOT = '/var/lib/awx/job_status'
    SECRET_KEY = file('/etc/tower/SECRET_KEY', 'rb').read().strip()
    ALLOWED_HOSTS = ['*']
    INTERNAL_API_URL = 'http://127.0.0.1:8052'
    SERVER_EMAIL = 'root@localhost'
    DEFAULT_FROM_EMAIL = 'webmaster@localhost'
    EMAIL_SUBJECT_PREFIX = '[AWX] '
    EMAIL_HOST = 'localhost'
    EMAIL_PORT = '25'
    EMAIL_HOST_USER = ''
    EMAIL_HOST_PASSWORD = ''
    EMAIL_USE_TLS = False

    LOGGING['handlers']['console'] = {
        '()': 'logging.StreamHandler',
        'level': 'DEBUG',
        'formatter': 'simple',
    }

    LOGGING['loggers']['django.request']['handlers'] = ['console']
    LOGGING['loggers']['rest_framework.request']['handlers'] = ['console']
    LOGGING['loggers']['awx']['handlers'] = ['console']
    LOGGING['loggers']['awx.main.commands.run_callback_receiver']['handlers'] = ['console']
    LOGGING['loggers']['awx.main.commands.inventory_import']['handlers'] = ['console']
    LOGGING['loggers']['awx.main.tasks']['handlers'] = ['console']
    LOGGING['loggers']['awx.main.scheduler']['handlers'] = ['console']
    LOGGING['loggers']['django_auth_ldap']['handlers'] = ['console']
    LOGGING['loggers']['social']['handlers'] = ['console']
    LOGGING['loggers']['system_tracking_migrations']['handlers'] = ['console']
    LOGGING['loggers']['rbac_migrations']['handlers'] = ['console']
    LOGGING['loggers']['awx.isolated.manager.playbooks']['handlers'] = ['console']
    LOGGING['handlers']['callback_receiver'] = {'class': 'logging.NullHandler'}
    LOGGING['handlers']['fact_receiver'] = {'class': 'logging.NullHandler'}
    LOGGING['handlers']['task_system'] = {'class': 'logging.NullHandler'}
    LOGGING['handlers']['tower_warnings'] = {'class': 'logging.NullHandler'}
    LOGGING['handlers']['rbac_migrations'] = {'class': 'logging.NullHandler'}
    LOGGING['handlers']['system_tracking_migrations'] = {'class': 'logging.NullHandler'}
    LOGGING['handlers']['management_playbooks'] = {'class': 'logging.NullHandler'}

    CACHES = {
        'default': {
            'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
            'LOCATION': '{}:{}'.format("memcached-svc", "11211")
        },
        'ephemeral': {
            'BACKEND': 'django.core.cache.backends.locmem.LocMemCache',
        },
    }

    USE_X_FORWARDED_PORT = True
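The two SYSTEM_TASK_ABS_* lines in the settings are plain arithmetic on the task container requests. The sketch below reproduces the Jinja expressions in Python, assuming awx_task_cpu_request is expressed in millicores and awx_task_mem_request in Gi (the units used for the resource requests in the Deployment). Reading the constants as "4 forks per CPU" and "one fork per 100 MB" is my interpretation, not something the template states.

```python
def system_task_abs_cpu(cpu_request_millicores):
    """Mirror of ((awx_task_cpu_request|int / 1000) * 4)|int."""
    return int((int(cpu_request_millicores) / 1000) * 4)


def system_task_abs_mem(mem_request_gi):
    """Mirror of ((awx_task_mem_request|int * 1024) / 100)|int."""
    return int((int(mem_request_gi) * 1024) / 100)


# Sample requests, chosen only for illustration.
for cpu, mem in ((500, 1), (1500, 2)):
    print(cpu, "m ->", system_task_abs_cpu(cpu), "|", mem, "Gi ->", system_task_abs_mem(mem))
```

Both results are truncated to integers, so small requests round down: a 500m CPU request gives a CPU capacity of 2.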

And finally the AWX pod, service and ingress:

---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: awx-deployement
  namespace: awx
  labels:
    app: awx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: awx
  template:
    metadata:
      labels:
        app: awx
    spec:
      containers:
        - image: ansible/awx_web:latest
          name: awx-web
          imagePullPolicy: Always
          env:
            - name: DATABASE_USER
              value: {{ awx_pg_username }}
            - name: DATABASE_NAME
              value: {{ awx_pg_database }}
            - name: DATABASE_HOST
              value: {{ awx_pg_hostname|default('postgresql-awx-postgresql') }}
            - name: DATABASE_PORT
              value: "{{ awx_pg_port|default('5432') }}"
            - name: DATABASE_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: "awx-secrets"
                  key: pg_password
            - name: RABBITMQ_USER
              value: guest
            - name: RABBITMQ_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: "awx-secrets"
                  key: rabbitmq_password
            - name: RABBITMQ_HOST
              value: rabbitmq-svc
            - name: RABBITMQ_PORT
              value: "5672"
            - name: RABBITMQ_VHOST
              value: awx
            - name: MEMCACHED_HOST
              value: "memcached-svc.awx"
            - name: MEMCACHED_PORT
              value: "11211"
          ports:
            - containerPort: 8052
          volumeMounts:
            - name: awx-application-config
              mountPath: "/etc/tower"
              readOnly: true
            - name: "awx-confd"
              mountPath: "/etc/tower/conf.d/"
              readOnly: true
          resources:
            requests:
              memory: "{{ awx_web_mem_request }}Gi"
              cpu: "{{ awx_web_cpu_request }}m"
        - image: ansible/awx_task:latest
          name: awx-task
          imagePullPolicy: Always
          command:
            - /usr/bin/launch_awx_task.sh
          securityContext:
            privileged: true
          env:
            # - name: AWX_SKIP_MIGRATIONS
            #   value: "1"
            - name: DATABASE_USER
              value: {{ awx_pg_username }}
            - name: DATABASE_NAME
              value: {{ awx_pg_database }}
            - name: DATABASE_HOST
              value: {{ awx_pg_hostname|default('postgresql-awx-postgresql') }}
            - name: DATABASE_PORT
              value: "{{ awx_pg_port|default('5432') }}"
            - name: DATABASE_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: "awx-secrets"
                  key: pg_password
            - name: AWX_ADMIN_USER
              value: admin
            - name: AWX_ADMIN_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: "awx-secrets"
                  key: admin_password
            - name: RABBITMQ_USER
              value: guest
            - name: RABBITMQ_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: "awx-secrets"
                  key: rabbitmq_password
            - name: RABBITMQ_HOST
              value: rabbitmq-svc
            - name: RABBITMQ_PORT
              value: "5672"
            - name: RABBITMQ_VHOST
              value: awx
            - name: MEMCACHED_HOST
              value: "memcached-svc.awx"
            - name: MEMCACHED_PORT
              value: "11211"
          volumeMounts:
            - name: awx-application-config
              mountPath: "/etc/tower"
              readOnly: true
            - name: "awx-confd"
              mountPath: "/etc/tower/conf.d/"
              readOnly: true
          resources:
            requests:
              # same variables as SYSTEM_TASK_ABS_CPU / SYSTEM_TASK_ABS_MEM in the ConfigMap
              memory: "{{ awx_task_mem_request }}Gi"
              cpu: "{{ awx_task_cpu_request }}m"
      volumes:
        - name: awx-application-config
          configMap:
            name: awx-config
            items:
              - key: awx_settings
                path: settings.py
              - key: secret_key
                path: SECRET_KEY
        - name: "awx-confd"
          secret:
            secretName: "awx-secrets"
            items:
              - key: confd_contents
                path: 'secrets.py'
---
kind: Service
apiVersion: v1
metadata:
  name: awx-svc
  namespace: awx
spec:
  selector:
    app: awx
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 8052
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: awx-ing
  namespace: awx
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
    - host: awx.{{ dns_zone }}
      http:
        paths:
          - path: /
            backend:
              serviceName: awx-svc
              servicePort: 80

Because awx_web and awx_task use an id based on the hostname to synchronise their tasks (awx-manage provision_instance --hostname=$(hostname)), I had to put the two docker images in the same pod, where the containers share the same hostname.

Also, the ConfigMap template comes from the AWX installer role.

All these templates are rendered and sent to Kubernetes with a simple task:

- name: Apply Deployment
  shell: |
    echo {{ lookup('template', item + '.yml.j2') | quote }} | kubectl apply -f -
  loop:
    - rabbitmq
    - memcached
    - secrets
    - configMap
    - awx
  no_log: true

AWX configuration

Now that we have AWX running in our Kubernetes cluster, it’s time to configure it, with Ansible of course.

The AWX role uses the uri module to configure AWX through its API.

configure.yml

---
- name: configure authentication
  uri:
    method: PUT
    url: "{{ awx_url }}/api/v2/settings/authentication/"
    user: admin
    password: "{{ awx_admin_password }}"
    force_basic_auth: yes
    body_format: json
    body:
      SESSION_COOKIE_AGE: 1800
      SESSIONS_PER_USER: -1
      AUTH_BASIC_ENABLED: true
      OAUTH2_PROVIDER:
        ACCESS_TOKEN_EXPIRE_SECONDS: 31536000000
        AUTHORIZATION_CODE_EXPIRE_SECONDS: 600
      ALLOW_OAUTH2_FOR_EXTERNAL_USERS: true
      SOCIAL_AUTH_ORGANIZATION_MAP: null
      SOCIAL_AUTH_TEAM_MAP: null
      SOCIAL_AUTH_USER_FIELDS: null

- name: configure basic settings
  uri:
    method: PATCH
    url: "{{ awx_url }}/api/v2/settings/all/"
    user: admin
    password: "{{ awx_admin_password }}"
    force_basic_auth: yes
    body_format: json
    body:
      ALLOW_OAUTH2_FOR_EXTERNAL_USERS: true
      AUTH_BASIC_ENABLED: true
      MANAGE_ORGANIZATION_AUTH: true
      ORG_ADMINS_CAN_SEE_ALL_USERS: true
      SESSION_COOKIE_AGE: 86400
      SESSIONS_PER_USER: -1
      TOWER_ADMIN_ALERTS: true
      TOWER_URL_BASE: "{{ awx_url }}"

- include_tasks: configure_item.yml
  with_items: "{{ awx_configuration }}"

configure_item.yml :

---
# Look the object up by name; it is only created when nothing is found
- name: get {{ item.config_name }} credential
  uri:
    method: GET
    url: "{{ awx_url }}/api/v2/{{ item.config_type }}/?name={{ item.config_name }}"
    user: admin
    password: "{{ awx_admin_password }}"
    force_basic_auth: yes
  register: awx_config_register

- set_fact:
    config_body: "{{ item.config_body }}"

# Resolve each dependency name to the id AWX assigned to it
- name: fetch {{ item.config_name }} dependencies
  uri:
    method: GET
    url: "{{ awx_url }}/api/v2/{{ dep.type }}/?name={{ dep.name }}"
    user: admin
    password: "{{ awx_admin_password }}"
    force_basic_auth: yes
  register: awx_config_dependencies_register
  when: item.config_dependencies is defined and awx_config_register.json.count == 0
  # default([]) keeps the loop valid for items without dependencies
  loop: "{{ item.config_dependencies | default([]) }}"
  loop_control:
    loop_var: dep

- name: set {{ item.config_name }} {{ item.config_type }} configuration body with dependencies
  set_fact:
    config_body: "{{ config_body | combine({res.dep.param: res.json.results[0].id}) }}"
  loop: "{{ awx_config_dependencies_register.results }}"
  loop_control:
    loop_var: res
  when: item.config_dependencies is defined and awx_config_register.json.count == 0

- name: configure {{ item.config_name }} {{ item.config_type }}
  uri:
    method: POST
    url: "{{ awx_url }}/api/v2/{{ item.config_type }}/"
    user: admin
    password: "{{ awx_admin_password }}"
    force_basic_auth: yes
    body_format: json
    status_code:
      - 200
      - 201
    body: "{{ config_body }}"
  when: awx_config_register.json.count == 0
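To make the flow of configure_item.yml easier to follow, here is the same logic as a small Python sketch: look the object up by name, stop if it exists, otherwise swap each dependency name for its id and create the object. The `api` object and the `FakeApi` class are hypothetical stand-ins so the example runs without an AWX server; they are not part of AWX or Ansible.

```python
from urllib.parse import quote, unquote


def ensure_item(api, item):
    """Create item through the API unless an object with that name exists."""
    found = api.get("/api/v2/%s/?name=%s" % (item["config_type"], quote(item["config_name"])))
    if found["count"] != 0:
        return None  # already configured, nothing to do

    body = dict(item["config_body"])
    for dep in item.get("config_dependencies", []):
        dep_found = api.get("/api/v2/%s/?name=%s" % (dep["type"], quote(dep["name"])))
        # Replace the dependency name with the id of the existing object.
        body[dep["param"]] = dep_found["results"][0]["id"]
    return api.post("/api/v2/%s/" % item["config_type"], body)


class FakeApi:
    """In-memory stand-in for the AWX API, only for this example."""

    def __init__(self):
        self.objects = {"credentials": [{"id": 7, "name": "git-cred"}], "projects": []}

    def get(self, path):
        kind, _, name = path[len("/api/v2/"):].partition("/?name=")
        results = [o for o in self.objects[kind] if o["name"] == unquote(name)]
        return {"count": len(results), "results": results}

    def post(self, path, body):
        kind = path[len("/api/v2/"):].strip("/")
        created = dict(body, id=100 + len(self.objects[kind]))
        self.objects[kind].append(created)
        return created


api = FakeApi()
project = {
    "config_name": "playbooks",
    "config_type": "projects",
    "config_dependencies": [{"type": "credentials", "param": "credential", "name": "git-cred"}],
    "config_body": {"name": "playbooks", "scm_type": "git"},
}
first = ensure_item(api, project)   # creates the project
second = ensure_item(api, project)  # finds it and does nothing
print(first, second)
```

Running it twice shows why the playbook can be replayed safely: the second call finds the project and skips the POST. The flip side is that an existing object is never updated, only created once.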

With these tasks I can use a single variable, awx_configuration, which contains all my projects, repos, inventories, etc.

awx_configuration:
  ## credential git
  - config_name: git-cred
    config_type: credentials
    config_body:
      name: "git-cred"
      description: ""
      organization: 1
      credential_type: 2 #git
      inputs:
        username: "malt"
        ssh_key_data: "{{ awx_ssh_private_key }}"

  ## credential ssh machine
  - config_name: ssh-cred
    config_type: credentials
    config_body:
      name: "ssh-cred"
      description: ""
      organization: 1
      credential_type: 1 #machine
      inputs:
        username: "deploy"
        ssh_key_data: "{{ awx_gcp_ssh_private_key }}"
        ssh_key_unlock: "{{ awx_gcp_ssh_private_key_password }}"

  ## credential ansible vault
  - config_name: ansible-vault-cred
    config_type: credentials
    config_body:
      name: "ansible-vault-cred"
      description: ""
      organization: 1
      credential_type: 3 #vault
      inputs:
        vault_password: "{{ awx_ansible_vault_password }}"

  ## credential gcloud inventory
  - config_name: gce-cred
    config_type: credentials
    config_body:
      name: gce-cred
      organization: 1
      credential_type: 10 # gce
      inputs:
        project: "default"
        username: "ansible@account.iam.gserviceaccount.com"
        ssh_key_data: "{{ awx_gcloud_private_key }}"

  ## projects
  - config_name: playbooks
    config_type: projects
    config_dependencies:
      - type: credentials
        param: credential
        name: git-cred
    config_body:
      name: playbooks
      description: ""
      scm_type: git
      scm_url: git@git.malt.fr/ansible/playbooks
      scm_branch: master
      scm_clean: false
      scm_delete_on_update: false
      timeout: 0
      organization: 1
      scm_update_on_launch: true
      scm_update_cache_timeout: 0
      custom_virtualenv: null

  ## notification_templates
  - config_name: slack
    config_type: notification_templates
    config_body:
      name: "slack"
      description: ""
      organization: 1
      notification_type: "slack"
      notification_configuration:
        channels:
          - "#3-ops-ansible"
        use_ssl: false
        token: "{{ awx_slack_bot_token }}"
        use_tls: false

  ## inventories
  - config_name: gce-inventory
    config_type: inventories
    config_dependencies:
      - type: credentials
        param: credential
        name: ansible-vault-cred
    config_body:
      name: gce-inventory
      organization: 1

  ## inventory sources
  - config_name: inventory-gce
    config_type: inventory_sources
    config_dependencies:
      - type: credentials
        param: credential
        name: gce-cred
      - type: inventories
        param: inventory
        name: gce-inventory
    config_body:
      name: "inventory-gce"
      source: "gce"
      source_regions: "all"
      timeout: 0
      verbosity: 1
      update_on_launch: true
      update_cache_timeout: 0
      update_on_project_update: false

  - config_name: inventory-static
    config_type: inventory_sources
    config_dependencies:
      - type: inventories
        param: inventory
        name: gce-inventory
      - type: projects
        param: source_project
        name: playbooks
    config_body:
      credential:
      name: "inventory-static"
      source: "scm"
      source_path: "inventories"
      source_regions: ""
      source_vars:
      overwrite_vars: true
      verbosity: 1
      update_on_launch: false
      update_cache_timeout: 0
      update_on_project_update: true

  ## jobs templates
  - config_name: deploy
    config_type: job_templates
    config_dependencies:
      - type: projects
        param: project
        name: playbooks
      - type: credentials
        param: credential
        name: ssh-cred
      - type: credentials
        param: vault_credential
        name: ansible-vault-cred
      - type: inventories
        param: inventory
        name: gce-inventory
    config_body:
      name: "deploy"
      job_type: "run"
      playbook: "playbooks/deploy.yml"
      verbosity: 1
      extra_vars: "---\ndebug: true"
      diff_mode: true
      ask_variables_on_launch: true

Now that everything is configured, I can log in with the admin password I’ve set, and all my inventories, credentials, projects, etc. are already there!

All I have to do is click on the rocket button to deploy everything else, and I will receive a Slack notification when the job is done!

I have a question about the first yaml file. This is my first time attempting to configure traefik in kubernetes, and I’m curious what loadBalancerIP: "{{ kubernetes_loadbalancer_ip }}" is referring to exactly?

What are the pre-requisites for attempting this? I have a k8s cluster built out already with ceph storage, and only a few services running. I just really want to install AWX the way that you did.

The {{ kubernetes_loadbalancer_ip }} variable refers to the global IP I reserved with gcloud.